Confident AI versus LLM Labs

Confident AI

Ideal For

Assess the production readiness of LLM applications

Improve LLMs through continuous monitoring

Manage evaluation datasets efficiently

Incorporate user feedback to drive improvements

Key Strengths

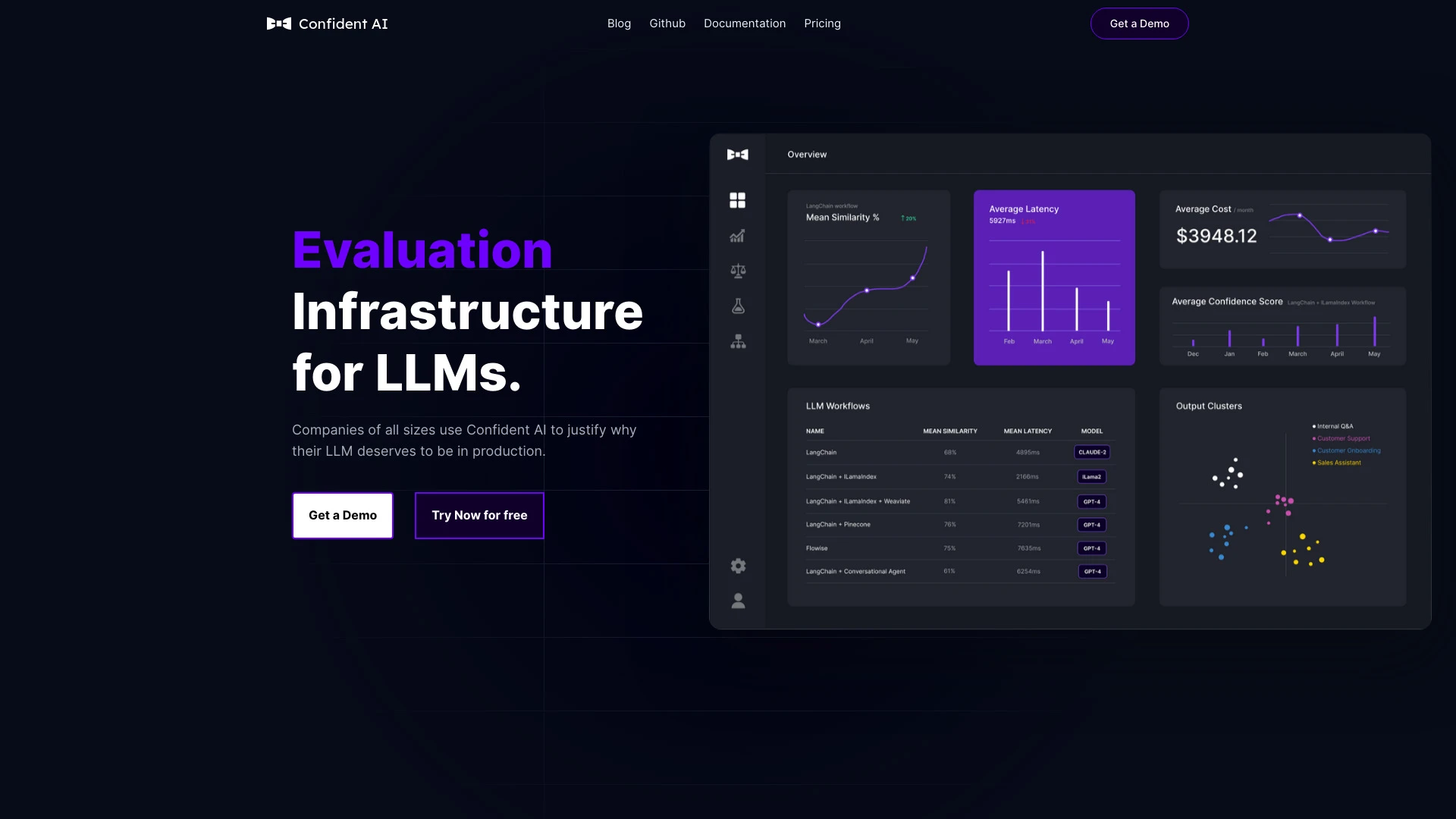

Comprehensive metrics for in-depth evaluation

Uses human feedback to drive automatic improvements

User-friendly interface for managing datasets

Core Features

14+ metrics for LLM experiments

Dataset management

Performance monitoring

Human feedback integration

Compatibility with the DeepEval framework

LLM Labs

Ideal For

Indie developers evaluating language models

AI enthusiasts testing new technologies

Researchers comparing model performance

Startups choosing a language model

Key Strengths

Allows side-by-side comparisons

Saves time in model evaluation

Boosts developer productivity

Core Features

Simultaneous testing of multiple language models

Visual performance comparisons

User-friendly side-by-side interface

Detailed usability analysis

Easy sign-in with a Google account